Incrementality testing is a lot like taking a snapshot with an instant camera. It captures a single moment with seemingly perfect clarity, but tells you nothing about what happened before or after that exact instant. Many marketers have become fixated on these snapshots, believing they provide the most accurate picture of marketing effectiveness, while overlooking how quickly the scene changes once the photo is developed. Just as a single photograph can’t tell you how a story unfolds over time, incrementality testing can’t reveal how marketing effectiveness evolves across different seasons, competitive environments, or consumer trends.

In today’s increasingly complex marketing landscape, measurement methodologies have taken center stage. As budgets tighten and C-suites demand more accountability, marketers are under pressure to prove the true value of their spend. In this context, incremental Return on Ad Spend (iROAS) has emerged as a seductive solution, promising to reveal which marketing efforts truly drive incremental business value. But does it deliver on this promise? Let’s explore what iROAS actually is, why it’s perceived as valuable, and—most importantly—its critical limitations that every marketer should understand.

What is iROAS and how does it compare to traditional ROAS?

Incremental Return on Ad Spend (iROAS) measures the additional revenue generated per dollar of ad spend, compared to what would have happened without those marketing activities. Unlike traditional ROAS, which simply divides revenue by spend without considering what sales might have occurred organically, iROAS attempts to isolate the true causal impact of your marketing efforts. The concept is compelling: wouldn’t you want to know exactly how much additional revenue your campaigns are driving above what would have happened anyway?

This methodology typically relies on incrementality testing, often through geo-testing approaches. In these tests, marketers select certain geographic regions to receive marketing treatments (like ads or promotions) while “control” regions receive no exposure. By comparing the performance between these regions, marketers attempt to calculate the “lift” or incremental impact of their marketing activities.
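The sketch below makes the “lift” calculation concrete. It shows one simple way to analyze a geo test. First, scale the control regions’ revenue by the pre-campaign ratio between test and control regions. That gives an estimate of what the test regions would have earned without ads. The difference between that estimate and actual revenue is treated as incremental revenue. This ratio approach is an assumption chosen for illustration; real tests often use difference-in-differences or synthetic-control methods. The `GeoTestResult` class, the `estimate_lift` function and all revenue figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GeoTestResult:
    """Aggregated revenue for one geo test (all figures are hypothetical)."""
    test_pre: float        # revenue in test regions before the campaign
    control_pre: float     # revenue in control regions before the campaign
    test_during: float     # revenue in test regions while ads ran
    control_during: float  # revenue in control regions over the same window
    spend: float           # ad spend in the test regions


def estimate_lift(r: GeoTestResult) -> tuple[float, float]:
    """Return (incremental_revenue, iroas) using a simple pre-period ratio.

    The control regions' test-window revenue is scaled by how large the test
    regions were relative to the control regions before the campaign. That
    scaled number stands in for what the test regions would have earned
    without ads (the counterfactual).
    """
    if r.control_pre <= 0 or r.spend <= 0:
        raise ValueError("control_pre and spend must be positive")
    scale = r.test_pre / r.control_pre
    counterfactual = r.control_during * scale
    incremental = r.test_during - counterfactual
    return incremental, incremental / r.spend


result = GeoTestResult(test_pre=100_000, control_pre=125_000,
                       test_during=118_000, control_during=130_000,
                       spend=5_000)
incremental, iroas = estimate_lift(result)
print(f"incremental revenue: {incremental:,.0f}, iROAS: {iroas:.2f}")
```

With these made-up numbers, the control regions imply the test regions would have earned about $104,000 without ads. They actually earned $118,000, which suggests roughly $14,000 of incremental revenue, or an iROAS of 2.8. Every figure in that chain depends on the assumption that the pre-campaign ratio still holds during the test. That assumption is exactly what the limitations discussed later call into question.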

Traditional ROAS, by comparison, takes a much simpler approach. It divides attributed revenue by marketing spend, typically using last-click or multi-touch attribution to determine which channels get credit for sales. This makes it easier to calculate but potentially less accurate if customers would have purchased without seeing your marketing campaigns. The perceived promise of iROAS is that it will cut through this uncertainty to reveal the true incremental value of your marketing investments.
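The gap between the two metrics is easiest to see with numbers. The following sketch uses a hypothetical campaign in which a large share of attributed sales would have happened anyway. The function names and figures are illustrative assumptions. The `baseline` value is simply assumed here; in practice, it is the quantity an incrementality test tries to estimate.

```python
def roas(attributed_revenue: float, spend: float) -> float:
    """Traditional ROAS: all attributed revenue divided by spend."""
    return attributed_revenue / spend


def iroas(attributed_revenue: float, baseline_revenue: float, spend: float) -> float:
    """iROAS: only revenue above the no-marketing baseline is counted."""
    return (attributed_revenue - baseline_revenue) / spend


# Hypothetical campaign: $10,000 spend and $50,000 in attributed sales, of which
# an assumed $35,000 would have happened anyway (e.g. loyal repeat buyers).
spend = 10_000
attributed = 50_000
baseline = 35_000

print(f"ROAS:  {roas(attributed, spend):.1f}")
print(f"iROAS: {iroas(attributed, baseline, spend):.1f}")
```

Traditional ROAS reports 5.0 for this campaign, while iROAS reports 1.5, because only $15,000 of the $50,000 is counted as incremental. The sketch simplifies by treating incremental revenue as a subset of attributed revenue. In reality, attribution can also miss sales that marketing did drive, so the two numbers need not be related this neatly.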

However, this seemingly straightforward calculation masks considerable complexity and potential for error. The difference between traditional ROAS and iROAS isn’t just methodological—it represents fundamentally different ways of thinking about marketing effectiveness and can lead to dramatically different strategic decisions.

Why iROAS is perceived as important

Many marketers have embraced iROAS, some considering it the “gold standard” of marketing measurement, and it’s not hard to understand why. In an era where data privacy changes have eroded the effectiveness of traditional attribution methods, the appeal of a methodology that promises to reveal the true causal impact of marketing spend is undeniable. Marketers are increasingly asked not just to report on performance, but to prove the value of their investments in driving incremental business growth.

The concept of incrementality addresses one of marketing’s oldest challenges: determining which sales would have happened anyway versus those truly driven by marketing efforts. For brands with established market presence or strong organic traffic, this distinction is crucial. Without understanding incrementality, you might continue pouring money into campaigns that appear successful but are actually reaching customers who would have purchased regardless.

This perception has been reinforced by influential platforms and measurement providers. Facebook/Meta, for instance, has published research on incrementality testing (though interestingly, they’ve also published studies questioning its accuracy in certain contexts). The methodology has gained further credibility through adoption by sophisticated marketing organizations and endorsement by industry thought leaders, creating an all-too-familiar pressure that can make marketers feel they’re falling behind if they’re not measuring incrementality.

The theoretical appeal is clear: in a perfect world, incrementality testing would eliminate all the guesswork from marketing measurement, allowing you to optimize your budget with complete confidence in the incremental return of each dollar spent. But as we’ll explore next, reality falls considerably short of this ideal.

Key limitations of iROAS

The promise of incrementality testing is compelling, but the practical limitations are significant and often overlooked. Before building your measurement strategy around iROAS, it’s crucial to understand these constraints. Marketers who dive into incrementality testing without recognizing these limitations often end up with misleading results that can drive poor decision-making.

Methodology limitations

The most fundamental challenges lie in the methodology itself, particularly when using geo-testing approaches:

- Control group validity: Establishing proper control groups is nearly impossible in real-world scenarios. A control region is only useful if it behaves the way the test region would have behaved without the marketing treatment. Carefully matching demographics between test and control regions cannot guarantee that, because no two markets are truly identical.

- Regional variability: No two regions or cities have identical consumer behaviors, even with matching demographics. Think about how differently people order food in New York versus San Francisco, or how seasonal patterns affect shopping behaviors across different climates.

- External confounding factors: Regional markets are constantly exposed to external factors that can dramatically skew results. A local event, weather pattern, or economic shock might impact one region but not another, creating artificial differences in performance that have nothing to do with your marketing activities.

- Cross-contamination of test groups: Users can move between test and control zones, particularly in our increasingly mobile society. The effect is usually small, but it cannot be fully controlled for and it introduces additional noise into the results.

Implementation and interpretation limitations

Even when the methodology is sound, the implementation and interpretation of incrementality tests present additional challenges:

- Accuracy paradox: Incrementality tests can produce the right answers even when improperly designed, and wrong answers even when properly designed. Any single test result is a noisy estimate. This creates a dangerous false confidence, because marketers have no reliable way to tell whether their test results reflect reality or random chance.

- Point-in-time constraints: Incrementality tests only provide insights for a specific moment in time. Marketing effects are dynamic and change with seasons, competitive activity, and evolving consumer behavior. A campaign that shows strong incremental performance in January might show weak performance in June for reasons unrelated to the campaign itself.

- Limited actionability: Even when conducted properly, incrementality tests often don’t provide truly actionable insights. They might tell you if a channel is working incrementally, but not how to optimize it or how it interacts with other channels in your mix.

- Difficult validation: Without a complementary measurement methodology, it’s nearly impossible to validate the results of an incrementality test. This creates a circular problem where you can’t be sure if your test results are accurate without another way to measure performance.
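A small simulation helps illustrate the accuracy paradox and the validation problem. The Python sketch below reruns the same hypothetical geo test many times. The true iROAS is fixed at 2.0. Each region’s revenue also receives random noise, which stands in for local events, weather and other confounders. The Gaussian noise model, the `simulate_geo_tests` function and every parameter value are assumptions for illustration only.

```python
from __future__ import annotations

import random
import statistics


def simulate_geo_tests(true_iroas: float, spend: float, baseline: float,
                       noise_sd: float, n_tests: int, seed: int = 7) -> list[float]:
    """Simulate repeated geo tests of the same campaign.

    Every simulated test has the same true effect (true_iroas * spend of
    incremental revenue). Each region's revenue also gets independent
    Gaussian noise standing in for local events, weather, and other
    confounders. Returns the iROAS each test would have reported.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_tests):
        control = baseline + rng.gauss(0, noise_sd)
        test = baseline + true_iroas * spend + rng.gauss(0, noise_sd)
        estimates.append((test - control) / spend)
    return estimates


estimates = simulate_geo_tests(true_iroas=2.0, spend=5_000, baseline=100_000,
                               noise_sd=8_000, n_tests=1_000)
share_negative = sum(e < 0 for e in estimates) / len(estimates)
print(f"mean estimate: {statistics.mean(estimates):.2f}")
print(f"std dev:       {statistics.stdev(estimates):.2f}")
print(f"tests showing negative iROAS: {share_negative:.0%}")
```

Under these assumptions, the estimates average close to the true value, but individual tests scatter widely. A meaningful share of tests report a negative iROAS for a campaign that genuinely works. A single real test is one draw from a distribution like this. Nothing in that one result tells you whether it landed near the middle or in a tail. More regions, longer test windows and better estimators narrow the spread, but they do not remove it.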

Cost-effectiveness concerns

Beyond methodological and implementation challenges, there’s the significant question of cost-effectiveness. Incrementality tests are expensive to run properly, requiring substantial investments in experimental design, data collection, and analysis. Given the limitations outlined above, the actionable insights gained often don’t justify this expense. Many marketers find themselves with high-priced test results that don’t actually help them make better decisions about where to invest their next marketing dollar. When you factor in the opportunity cost of potential misallocations based on flawed test results, the true cost of incrementality testing can be much higher than the sticker price.

Additionally, incrementality tests typically need to be run repeatedly to account for changing market conditions, seasonal variations, and evolving consumer behavior. This ongoing expense can quickly add up, especially for brands with complex marketing mixes that would require multiple tests across different channels and campaigns. Before investing in incrementality testing, it’s critical to consider whether the potential insights justify the significant and recurring costs involved.

The challenge of validation

How do you know if your incrementality test results are accurate? This question highlights perhaps the most troubling aspect of iROAS measurement. Without a reliable way to validate your results, you’re essentially operating on faith that your testing methodology is sound and your results reflect reality rather than statistical noise or experimental artifacts.

This validation challenge is more serious than it might initially appear. Marketing decisions based on flawed incrementality measurements can lead to substantial misallocations of budget, potentially costing far more in opportunity cost than the price of the tests themselves. Yet many marketers proceed with confidence based solely on the fact that they’ve conducted an incrementality test, without questioning the results.

One promising approach to this validation challenge is to use marketing mix modeling (MMM) as a complementary methodology. Unlike incrementality testing, MMM takes a holistic view of marketing effectiveness, analyzing how all factors collectively influence business outcomes. An MMM can act as a gut check. It refocuses marketers on the big picture when they may be inclined to assume that an incrementality test’s results extrapolate beyond the moment in time when the test was run.

Cross-validating your incrementality tests with an MMM can provide a more nuanced and reliable understanding of marketing effectiveness while mitigating the risk of making decisions based on flawed measurements.
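One simple form of cross-validation is to check whether the MMM’s estimate for a channel falls inside the uncertainty range of the incrementality test for the same channel and period. The sketch below assumes that the test reports a point estimate with a standard error. It also assumes that both methods define iROAS the same way. The `cross_check` function and all inputs are hypothetical. This is not a description of any particular vendor’s validation process.

```python
from statistics import NormalDist


def cross_check(test_iroas: float, test_se: float, mmm_iroas: float,
                confidence: float = 0.95) -> dict:
    """Compare a geo test's iROAS with an MMM estimate for the same channel.

    Builds a normal-approximation confidence interval around the test result
    and reports whether the MMM estimate falls inside it.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    low, high = test_iroas - z * test_se, test_iroas + z * test_se
    return {
        "interval": (low, high),
        "mmm_inside": low <= mmm_iroas <= high,
        "gap": mmm_iroas - test_iroas,
    }


# Hypothetical inputs: a noisy test result and an MMM estimate for the same channel.
check = cross_check(test_iroas=2.8, test_se=1.1, mmm_iroas=1.4)
print(check)
```

With a standard error of 1.1, the 95% interval runs from roughly 0.6 to 5.0. An MMM estimate of 1.4 is therefore “consistent” with a test result of 2.8, even though the two imply very different budget decisions. Rerunning with `test_se=0.3` shrinks the interval to roughly 2.2 to 3.4 and flags the disagreement. A flag tells you that the two methods disagree, not which one is right. It is a prompt to investigate, not an answer.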

Alternatives and complementary approaches

Given the limitations of incrementality testing, marketers should consider complementary measurement approaches that provide a more complete view of marketing effectiveness. This isn’t about replacing incrementality testing entirely—it’s about developing a comprehensive measurement strategy that leverages the strengths of different methodologies while compensating for their respective weaknesses.

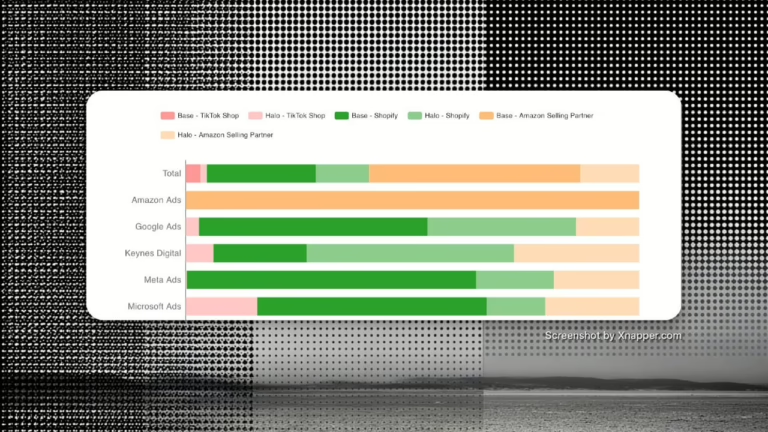

Marketing mix modeling stands out as a particularly valuable complement to incrementality testing. While incrementality tests focus on isolating specific campaigns in controlled experiments, MMM takes a holistic approach, analyzing how all marketing activities interact with each other and with non-marketing factors. This comprehensive view is especially valuable for understanding complex effects like halo impacts and long-term brand-building activities that are difficult to capture in short-term incrementality tests.

Wrapping it up…

Incrementality testing and iROAS represent a well-intentioned attempt to solve one of marketing’s most persistent challenges: understanding the true causal impact of marketing activities. In theory, these approaches offer a path to more accurate measurement and more effective budget allocation. In practice, however, they often fall short of this promise due to methodological limitations, validation challenges, and the inherent complexity of modern marketing environments.

This doesn’t mean we should abandon the pursuit of incrementality insights altogether. Rather, we should approach incrementality testing with appropriate skepticism and a clear understanding of its limitations. By using iROAS as one input among many—validated against complementary methodologies like MMM—marketers can develop a more nuanced and reliable understanding of marketing effectiveness.

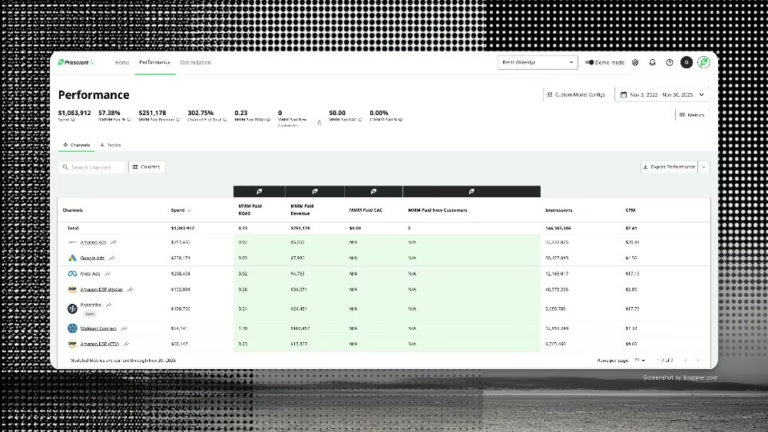

As privacy changes continue to reshape marketing measurement, successful brands will embrace integrated approaches rather than relying on any single methodology like iROAS. Prescient AI’s marketing mix modeling offers a powerful solution to this challenge—providing daily, campaign-level insights without the limitations of incrementality testing. By combining the holistic view of MMM with the granularity modern marketers need, Prescient empowers you to make confident decisions about your marketing investments in an increasingly complex landscape.