Your MMM results just landed. Facebook looks way stronger than you expected. TikTok looks weaker. That campaign you thought was crushing it? The model says it’s mediocre.

So what do you do? Trust the model and shift millions in budget based on data that doesn’t quite pass the sniff test? Or ignore the expensive measurement tool you’re paying for and go with your gut?

Most marketers are forced to choose between blind faith in their measurement tool and ignoring it entirely. Both options suck.

What if you could actually test whether your measurement approach is giving you accurate insights? Pressure-test different configurations before betting your budget on them?

That’s exactly what we built.

Your MMM breaks when it doesn’t match how your business really works

Here’s what keeps happening. You’re sitting on multiple data sources that all tell different stories. Your incrementality tests say one thing. Post-purchase surveys say another. Your MTA platform has its own perspective. Your MMM gives you yet another view.

Too many numbers, no clear answer. So decisions grind to a halt and budget stays exactly where it is, because nobody wants to make a major allocation shift when the data is all over the place.

Then there’s the trust issue. When you can’t see how your own data shapes the scenarios you’re evaluating, you second-guess everything. That makes you pull back on the big swings that could actually move the needle. You play it safe because confidence is low.

And if you’re an omnichannel brand? Your models drift even further from reality. Separate, rarely updated models for ecommerce, retail, Amazon, and your other channels never add up to one clear view of where to invest more. You’re stitching together insights from different tools that weren’t built to work together.

The stakes here are real. When measurement is wrong, budget decisions are wrong. You underfund your best channels because the model can’t see their value. You overfund channels that are just capturing demand others created. You kill campaigns that are actually working and scale campaigns about to hit saturation.

Real dollars = real consequences.

Most MMMs are asking for blind faith

The typical MMM platform gives you results and expects you to trust them. It won’t show you which assumptions drive those results. (We’ve published our assumptions if you want to read them.) You can’t see under the hood. In fact, vendors running an off-the-shelf solution often don’t know what’s under the hood themselves. And you definitely can’t adjust anything. You’re just supposed to trust that their model understands your specific business.

A few platforms allow limited model configuration. But full transparency and the ability to systematically compare different approaches? That’s rare.

Here’s why this happens. Every MMM makes assumptions about your business. Some incorporate external data like incrementality tests or surveys. Others don’t. Different modeling approaches capture different effects. And there’s no single “right” answer that works for every brand.

Your business might have unique dynamics that default model assumptions don’t capture. But if you can’t test that, you’ll never know.

Validation Layer lets you test before you commit

We built Validation Layer to solve exactly this problem. It lets you configure your model’s assumptions, compare performance across configurations, and choose the version that best fits your brand while preserving model health.

Here’s how it actually works: You start with your existing Prescient model as your baseline. Then you configure comparison models that incorporate your additional data or assumptions. Maybe that’s your incrementality test results. Maybe it’s insights from post-purchase surveys. Maybe it’s your MTA platform data. Maybe it’s custom assumptions about how your business works.

You run these models side by side against your historical performance. Compare accuracy. Look at patterns. See which approach gives you insights that actually match reality.
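One way to picture this side-by-side comparison is a backtest: feed each configuration the same historical period, compare its predictions with what actually happened, and rank the configurations by error. The sketch below is a minimal, hypothetical illustration that uses mean absolute percentage error (MAPE) as the accuracy metric. The function names, configuration names, and every number in it are made-up assumptions, not Prescient’s API, method, or client data.

```python
"""Hypothetical sketch: score MMM configurations against historical actuals.

All names and numbers are illustrative assumptions, not Prescient's API or data.
"""


def mape(actual: list[float], predicted: list[float]) -> float:
    """Mean absolute percentage error, in percent. Assumes no zero actuals."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equal length")
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


def rank_configurations(
    actual: list[float], predictions_by_config: dict[str, list[float]]
) -> list[tuple[str, float]]:
    """Return (config_name, MAPE) pairs sorted from most to least accurate."""
    scores = {name: mape(actual, preds) for name, preds in predictions_by_config.items()}
    return sorted(scores.items(), key=lambda item: item[1])


if __name__ == "__main__":
    # Made-up weekly revenue for a held-out period (not real client data).
    actual_revenue = [100.0, 120.0, 110.0, 130.0]
    # Made-up predictions from three hypothetical model configurations.
    predictions = {
        "default": [90.0, 125.0, 100.0, 140.0],
        "incrementality_calibrated": [98.0, 118.0, 112.0, 127.0],
        "survey_weighted": [105.0, 110.0, 120.0, 120.0],
    }
    for name, score in rank_configurations(actual_revenue, predictions):
        print(f"{name}: MAPE {score:.1f}%")
```

Lower MAPE means a configuration’s predictions tracked actual revenue more closely over the held-out weeks. In practice you’d want to check more than one metric and more than one time window before switching configurations, since a single short backtest can favor a model by chance.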

Then you choose the modeling approach that best reflects your business. Based on evidence, not blind faith.

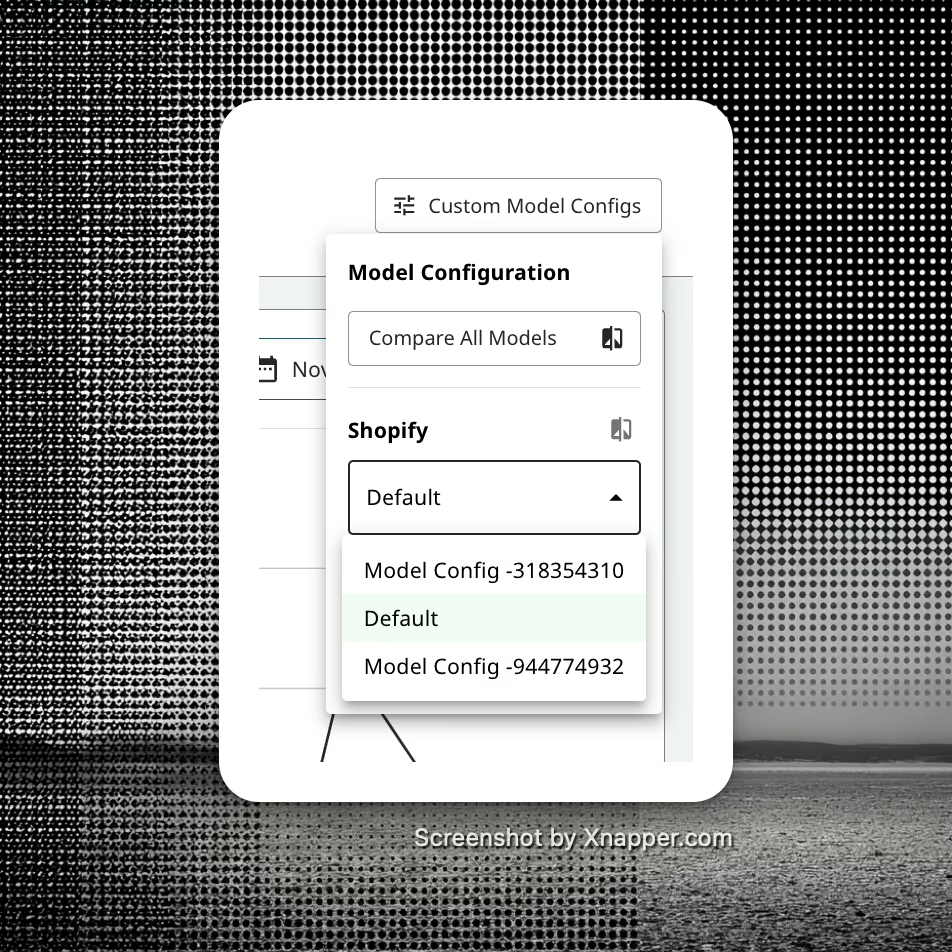

Choose between default and custom configurations

The first step is deciding what you want to test. Your default Prescient model gives you one perspective. But you might have incrementality test data suggesting different channel performance. Or survey responses indicating customers discovered you through different paths than your attribution shows.

Configure alternative models that incorporate these data sources. See what changes when you weight your model toward different assumptions.

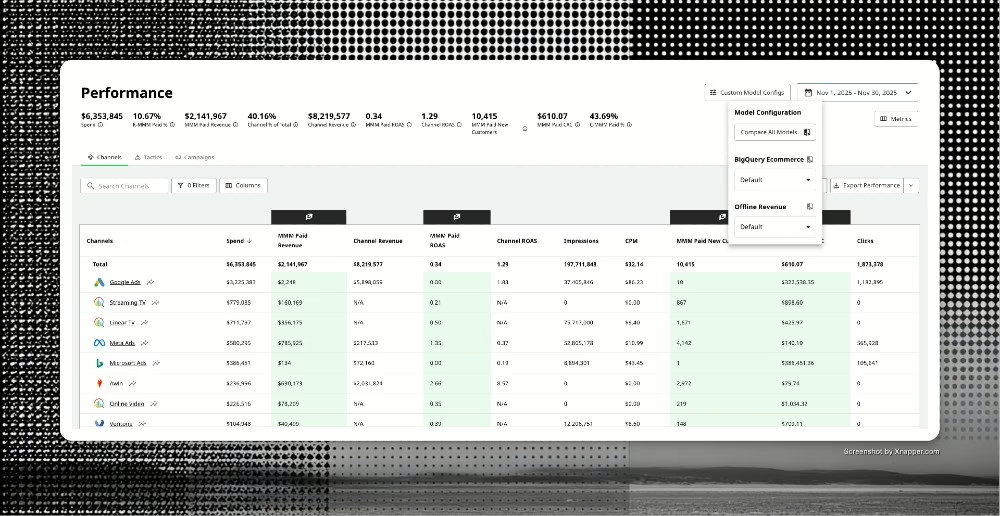

Analyze options with side-by-side model comparisons

This is where it gets interesting. You’re not just looking at one set of results and hoping they’re right. You’re comparing multiple approaches directly.

How does accuracy change when you incorporate incrementality data? Does your survey-weighted model better predict actual outcomes? Which configuration gives you more actionable insights for the decisions you need to make?

Side-by-side comparison removes the guesswork. You can see objectively which approach serves your business better.

Own your model with assumption tuning

This is about making the model truly yours, not just your vendor’s. You control the story the model tells about your business and channels instead of being stuck with a one-size-fits-all setup.

Adjust your assumptions for seasonality, promotions, and new channels. Test whether your understanding of how your business works improves model performance. Make your plan more stable and your big swings feel safer because you’ve validated the foundation.

Stay safe with guardrails powered by input validation diagnostics

We’re not giving you enough rope to hang yourself with here. Validation Layer includes guardrails that preserve model health while you experiment with configurations.

Input validation diagnostics flag when assumptions would break the model or introduce statistical problems. You get the freedom to customize within boundaries that keep your measurement sound.
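This post doesn’t describe how these diagnostics work internally, so the following is only a hypothetical sketch of the kind of input checks a guardrail layer might run before a model is fit. The `ChannelAssumption` fields, the bounds, and the 5% tightness threshold are illustrative assumptions, not Prescient’s actual rules.

```python
"""Hypothetical sketch of input-validation guardrails for MMM assumptions.

Field names and bounds are illustrative assumptions, not Prescient's actual rules.
"""
from dataclasses import dataclass


@dataclass
class ChannelAssumption:
    channel: str
    adstock_decay: float   # share of effect carried into the next period, assumed in [0, 1)
    roi_prior_mean: float  # e.g. derived from an incrementality test, assumed > 0
    roi_prior_sd: float    # uncertainty around that prior, assumed > 0


def validate(assumptions: list[ChannelAssumption]) -> list[str]:
    """Return human-readable problems; an empty list means the inputs passed."""
    problems: list[str] = []
    seen: set[str] = set()
    for a in assumptions:
        if a.channel in seen:
            problems.append(f"{a.channel}: duplicate channel")
        seen.add(a.channel)
        if not 0.0 <= a.adstock_decay < 1.0:
            problems.append(f"{a.channel}: adstock_decay must be in [0, 1)")
        if a.roi_prior_mean <= 0:
            problems.append(f"{a.channel}: roi_prior_mean must be positive")
        if a.roi_prior_sd <= 0:
            problems.append(f"{a.channel}: roi_prior_sd must be positive")
        elif a.roi_prior_sd < 0.05 * a.roi_prior_mean:
            # A very tight prior can let one test result overwhelm the observed data.
            problems.append(f"{a.channel}: roi_prior_sd is suspiciously tight")
    return problems


if __name__ == "__main__":
    inputs = [
        ChannelAssumption("facebook", 0.5, 2.0, 0.5),
        ChannelAssumption("tiktok", 1.2, 1.5, 0.01),  # deliberately invalid example
    ]
    for problem in validate(inputs):
        print(problem)
```

The checks collect every problem instead of stopping at the first one, so a single run shows everything wrong with a proposed configuration.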

What this actually means for how you operate

The philosophy here is simple: trust but validate. We’re confident in our modeling approach, but we’re not arrogant enough to think one approach fits every business perfectly. Some brands have unique data that should inform their specific models.

We’d rather give you tools to validate and customize than demand blind faith. That’s how you build actual trust.

- Take bigger swings that actually grow revenue and efficiency. You tune the model to how your brand really works. Then, when you lean into a channel or test something new, both you and your finance partner trust the upside.

- Make decisions faster with better alignment. Compare configurations until you find the approach that gives you the clearest, most actionable insights. This ends the standoffs between conflicting data sources. You’re not choosing which tool to believe anymore. You’re testing which approach actually works better for your business.

- Control your own narrative. The model reflects how your business works, not how some generic template thinks businesses work.

This isn’t about finding one universal truth

There isn’t one perfect model for every business. Your business has unique dynamics. What works for one brand might not work for another. The goal isn’t to find some universal truth about marketing. It’s to find the modeling approach that best captures YOUR reality.

You’re not blindly accepting default assumptions. You’re not ignoring expensive data you’ve collected. You’re systematically testing what works for your specific business.

This only works because Prescient’s underlying MMM is already built differently. Campaign-level granularity means you can validate at the level where you actually make decisions. Daily updates mean you’re testing with recent, relevant data. Our proprietary modeling approach means you’re starting from a strong baseline. Validation Layer makes a great foundation even better.

Ready to pressure-test your measurement?

For current Prescient clients, Validation Layer is available now in your dashboard. Start with comparing your default model against one scenario you’ve been questioning. Your Customer Success Manager can help you think through what to test first.

If you’re not using Prescient yet but this resonates, we should talk. You’re probably making budget decisions based on measurement you can’t validate right now. Book a demo to see how Validation Layer works. We’ll show you how to test your approach before betting your budget on it.

Because when you’re making decisions that affect millions in marketing spend, you shouldn’t have to choose between blind faith and flying blind.